Given the current state of affairs at Japan’s nuclear facilities, I thought it would be useful to do a quick analysis of what’s going wrong and why the officials on the ground are acting as they do (based on the very limited information trickling in through news sources). As of today (the morning of March 14th), two reactors have experienced explosions, partial core meltdowns, and multiple other failures. I’ve combined data from the news with failure analysis to offer an alternative view of the ongoing nuclear crisis in Japan.

Aerial view of the damaged Fukushima Daiichi nuclear plant in Okuma, Japan, on Monday, March 14th, 2011.

As with many aspects of usability, the FAA (Federal Aviation Administration) was the first to develop a practical understanding of Information Awareness and Failure Analysis: pilots and airplane designers want to minimize errors in flight and understand failure when it happens. Like the rest of the world, I’m extremely grateful for their insight into these two aspects of systems design and usability. Below is a quick introduction to the basics.

Information Awareness

Information Awareness is a wonderful term that describes the state of a user’s knowledge of the problem at any particular moment. This means that Information Awareness changes over time and from person to person. For designers of a complex system that aims to minimize human error, this means delivering just the right information, at the right time, to the right individual, to support decision making during problem solving within the limits of human cognitive capacity. This is not an easy task.

Delivering the right information implies that designers know what’s important at every point during a crisis. But this assumption is clearly a fallacy (as the design of the nuclear reactors shows; more on this below). Dumping an overload of data on a decision maker in a crisis is not productive either. Cognitive overload leads to informational blindness, not awareness.

Similarly, knowing the right time to show some critical bit of data, and knowing whom to show it to, is extremely difficult. It’s easy to notice mistakes AFTER the accident: “He should have gone left, not right.” But it’s important to understand that just as pilots don’t want to crash their planes into the ground, nuclear plant operators don’t want to cause a meltdown. People want to do the right thing; it is the designer’s job to make it easier for these individuals to identify good choices in an emergency.

“Operators are the inheritors of bad design.”—James Reason.

Failure Grid

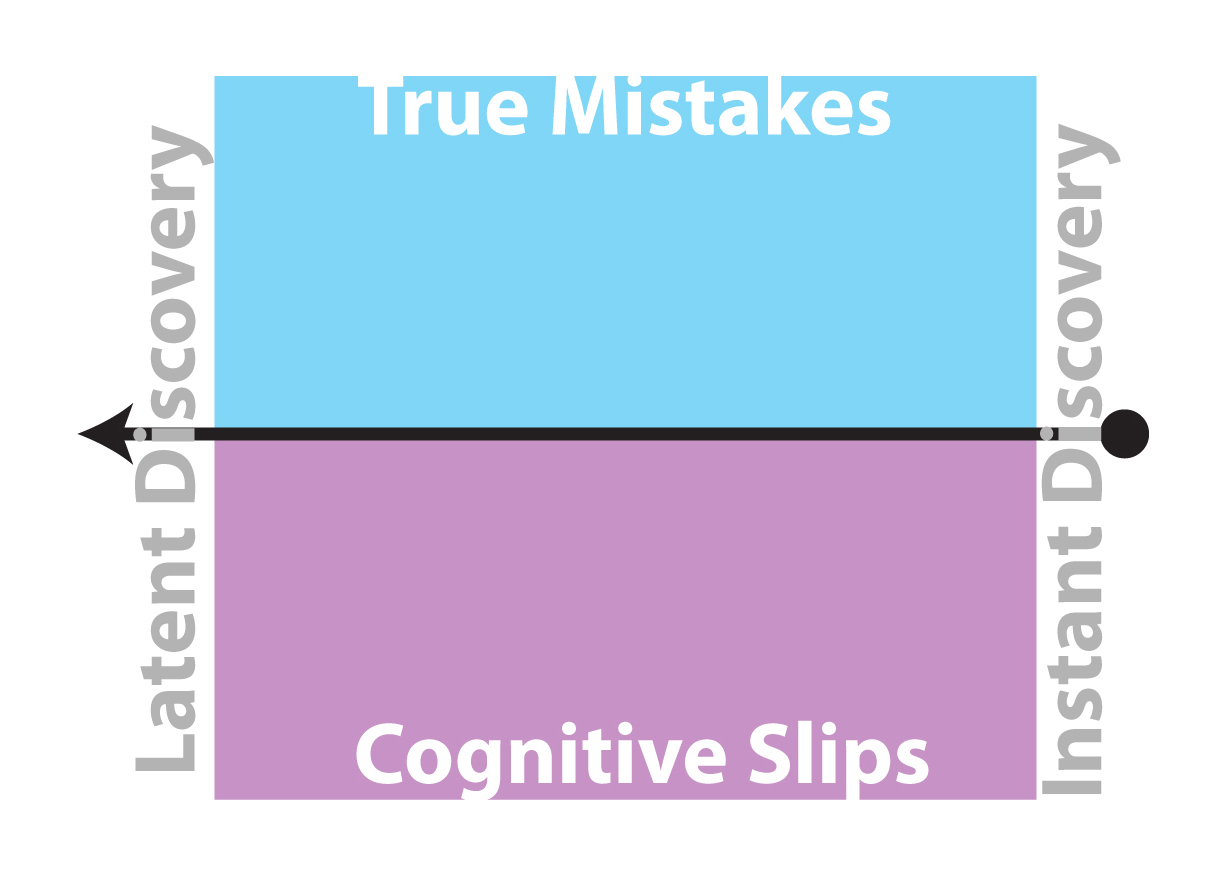

Just as I did for other aspects of design, I find a careful taxonomy of possible failures a good way to proceed. So here’s a quick breakdown:

- Purposeful actions leading to failure: these are true mistakes. You evaluated all the information you thought was important and decided to act in a certain way. Unfortunately, this was not the correct course of action, and it led to failure.

- Accidents: these are cognitive slips. You missed some critical bit of information (you simply didn’t notice it), or you accidentally pulled one lever (or pressed one button) when you really meant to do something else. The result is a failure.

The important difference between these two scenarios is intentionality: in one case, you thought it through and did exactly what you planned; in the other, you meant to do something else. Either way, the result is failure.

There’s also a difference in when the error was discovered:

- Instant Awareness of the mistake: you made an error, but the system instantly notified you of the resulting failure. As designers, we want to make sure that when errors are made, they are discovered right away. Of course, it’s better to prevent mistakes in the first place, but instant awareness of a problem is the next best thing.

- Latent Discovery of error: you made a mistake and are blissfully unaware of the problem. This is bad: it’s much easier to change the course of an asteroid headed for Earth while it’s still far, far away. When it comes to failure, time is of the essence.

By creating a grid of time of discovery versus intentionality, we can plot any failed decision. Note that the longer it takes to discover the problem, the more serious it becomes.
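To make the grid concrete, here is a minimal Python sketch that places a failure in one of the four cells. The class names, the `hours_to_discover` field, and the one-hour cutoff between "instant" and "latent" are all hypothetical choices for illustration; the grid itself does not define where instant awareness ends and latent discovery begins.

```python
from dataclasses import dataclass
from enum import Enum


class Intent(Enum):
    MISTAKE = "purposeful action (true mistake)"
    SLIP = "accident (cognitive slip)"


class Discovery(Enum):
    INSTANT = "instant awareness"
    LATENT = "latent discovery"


@dataclass(frozen=True)
class Failure:
    description: str
    intentional: bool          # did the person do exactly what they planned?
    hours_to_discover: float   # time between the error and its discovery


def classify(failure: Failure, latent_after_hours: float = 1.0) -> tuple:
    """Return the (Intent, Discovery) cell of the 2x2 failure grid.

    latent_after_hours is a hypothetical cutoff, not taken from the text.
    """
    intent = Intent.MISTAKE if failure.intentional else Intent.SLIP
    if failure.hours_to_discover < latent_after_hours:
        discovery = Discovery.INSTANT
    else:
        discovery = Discovery.LATENT
    return intent, discovery


# Illustrative examples (timings are rough, hypothetical figures).
examples = [
    Failure("backup generators placed on low ground", True, 40 * 365 * 24),
    Failure("operator presses the wrong button, alarm sounds", False, 0.01),
]
for f in examples:
    intent, discovery = classify(f)
    print(f"{f.description}: {intent.value} / {discovery.value}")
# backup generators placed on low ground: purposeful action (true mistake) / latent discovery
# operator presses the wrong button, alarm sounds: accident (cognitive slip) / instant awareness
```

Keeping intentionality and time of discovery as two independent fields mirrors the two axes of the grid: the same decision can move from "instant" to "latent" just by changing how quickly the system surfaces it.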

In general, latent errors stem from system designers and architects, while instant errors arise from practitioners and operators. Now we can look at the nuclear disaster in Japan.

Disaster at the Fukushima Daiichi Nuclear Plant in Okuma, Japan

Here are a few basic facts, as provided by Wikipedia. The Fukushima Daiichi Nuclear Plant is located on the eastern (and more seismically active) coast of Japan. It is a series of six boiling water reactors. Heat from the nuclear fission reaction boils the water, producing steam that turns the turbines, which generate electricity. The basic system was designed by Idaho National Laboratory and General Electric in the mid-1950s. The plants in Japan were built from 1967 to 1971. The life span of this nuclear facility was intended to be 25 years. At 40 years of operation, these plants were scheduled for decommissioning. Only 3 of the 4 coastal plants were operating on March 11, 2011 (see the aerial view above); the other (plant #4) was apparently down for maintenance.

Like all nuclear plants, these were built with several failure contingencies. Here’s a short list:

- The plants were built to withstand a magnitude 7.0 earthquake.

- There was a seawall around the facility in case of a tsunami.

- In an earthquake, a SCRAM procedure would execute an emergency shutdown of the nuclear reactors.

- Electrical pumps were designed to pump cool water into the container to keep the rods submerged (the fuel rods keep producing decay heat long AFTER shutdown, so without circulating water they can get hotter than during regular operation of the plant).

- Backup batteries could run the pumps in an emergency; they were designed to last about an hour or so.

- Diesel backup generators were available to take over the pumping when the batteries ran out of juice.

- There were vents built into the system to release steam from the reactors if the pressure inside got too high (this, of course, also releases radioactive material into the atmosphere).

So here are a few things that went wrong because of True Mistakes and Latent Discovery, or, as we call it, “bad design.”

- The quake was much stronger than the design specs: 9.0 according to Japan (8.9 according to the USGS). Because the magnitude scale is logarithmic, that is roughly 700 to 1,000 times more energy than the 7.0 quake the plant was designed to withstand.

- The seawall was too short: the tsunami easily overflowed the barrier, flooding the basements of multiple plants.

- The diesel backup generators were placed on low ground outside the facility, again a design decision based on overconfidence in the seawall. These backup generators were wiped out by the tsunami.

- The controls for the emergency pumps were located in the basements of the facilities (again, the seawall gave the designers a false sense of security). Once flooded, the pumps couldn’t easily be operated. So even when replacement generators were delivered to the facility, they couldn’t be hooked up to the pumps.
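To put the first bullet above in numbers, here is a short Python sketch comparing the reported magnitudes with the 7.0 design figure. It assumes the standard Gutenberg–Richter energy–magnitude relation, log10(E) = 1.5·M + constant, which is general seismology and not something taken from the news reports; the function names are hypothetical. It compares energy released at the source, not the shaking the plant actually felt, which also depends on distance and ground conditions.

```python
def energy_ratio(m_big: float, m_small: float) -> float:
    """Approximate ratio of radiated seismic energy between two magnitudes.

    Assumes log10(E) = 1.5 * M + const (Gutenberg-Richter), so each whole
    magnitude step is about 10**1.5, roughly 31.6 times more energy.
    """
    return 10 ** (1.5 * (m_big - m_small))


def amplitude_ratio(m_big: float, m_small: float) -> float:
    """Ratio of recorded wave amplitude: about 10x per magnitude step."""
    return 10 ** (m_big - m_small)


design_magnitude = 7.0  # design figure as stated in the text
for reported in (9.0, 8.9):  # Japan's and the USGS's figures
    e = energy_ratio(reported, design_magnitude)
    a = amplitude_ratio(reported, design_magnitude)
    print(f"M{reported} vs M{design_magnitude}: ~{e:,.0f}x energy, ~{a:,.0f}x amplitude")
# M9.0 vs M7.0: ~1,000x energy, ~100x amplitude
# M8.9 vs M7.0: ~708x energy, ~79x amplitude
```

This is why "much stronger" is an understatement: two magnitude units is not a small overshoot of a design spec but roughly three orders of magnitude in energy.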

In all of the above, the failures were caused by true mistakes, not cognitive slips: the designers built the plant this way. They made decisions based on what they believed was the correct thing to do at the time; not one designer wanted to create a plant that would suffer a meltdown. This is not about sabotage; it is about poor design choices. And of course, their government approved the location of the plant: right at sea level, on the eastern coast closer to the Ring of Fire, right next to a population center of over 1,000,000.

So now we can look at the cognitive slips and true mistakes made by the nuclear plant operators during the actual emergency.

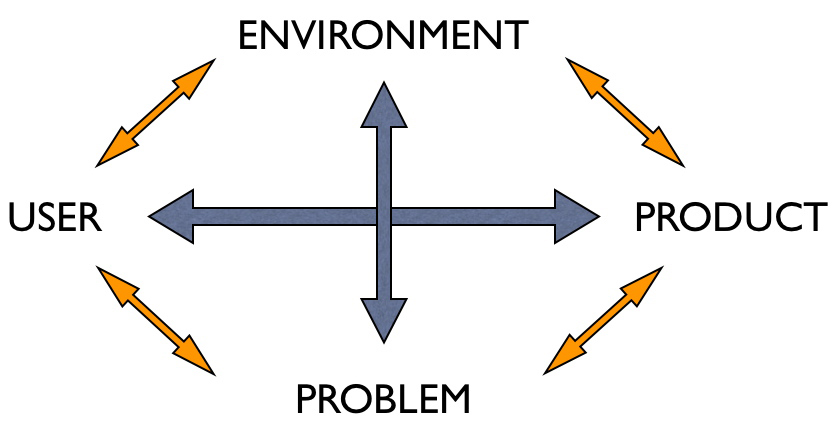

Error Ecosystem

Errors don’t happen in a vacuum. Nuclear plant operators work hard, but rarely have to face a real-life emergency (thank goodness for that!). Drills are one thing; real emergencies always present unexpected circumstances: flooded basements, lost pumps, useless generators. While these people may have prepared for some contingencies, this combination of multiple failures was not one of the scenarios. We know that because, if it had been, the generators would have been moved to higher ground and the pump controls would not have been placed in the basement. So the plant operators were “winging” it: there was no procedure for dealing with this crisis.

The Fukushima operators are using regular fire-fighting equipment to pump water into the containment building to cool the nuclear fuel. They are using seawater and trying to “push” it into the reactor. But the pressure in the reactor keeps building up in a cycle: water poured onto the superhot rods evaporates; the steam builds up, increasing the pressure; more water can’t be added (it’s like trying to pour water into an already full helium balloon); the steam has to be vented to prevent an explosion and to lower the pressure in the container; then more water is added to cool the nuclear fuel…

A stressed mind doesn’t work the same way as a relaxed, calm mind (that’s why so many people do worse on tests than their knowledge and ability would predict). Stress restricts working memory, leaving less cognitive capacity for the actual problem solving. The more stress there is in the environment, the less capacity we have to deal with it: we get cognitive overload. A 9.0 earthquake, a tsunami, and a possible core meltdown certainly qualify as a stressful environment. This is a direct path to cognitive slips.

But the environment is not limited to the catastrophe itself. These nuclear plants cost a lot of money. Decision makers had to consider the possible long-term cost of their actions: if we flood the plant with seawater, it will never be operational again. So on top of realizing that there might be a lot of damage and that a lot of people might die, the operators faced economic constraints as well. But no pressure…

And as with any interaction with a complex product (a nuclear plant, in this case), some consequences become unpredictable and some states become invisible to the operators. This can cause true mistakes: operators lose their information awareness; they don’t fully grasp what’s going on; they make decisions with incomplete data. For example, adding seawater with fire hoses damaged the water level indicators, turning the job of keeping the rods under water into an educated guess. Similarly, the vents were never designed for this, and they are no longer working. On top of this, there are cracks in the containment building that are letting the water escape, further exposing the rods…

The pressure is building up, and the Fukushima operators have to invent new procedures for containing the meltdown…

We can see this in a simple error ecosystem diagram: operators, environmental conditions, product design, and the current problem all interact to produce a very complex ecosystem. (Also see “Information Scaffolding.”)

As of now, we still don’t know the extent of the meltdown. We are certain that the rods were exposed (out of the water) and are therefore damaged, but how badly? It’s very difficult to judge. There have been three explosions: two blew the roofs off reactors 1 and 3, and one cracked the containment of reactor 2. Reactors 1, 2, and 3 have all been critically damaged. Most of the emergency workers had to be evacuated due to high levels of radiation.

“No. 4 is currently burning and we assume radiation is being released. We are trying to put out the fire and cool down the reactor,” the chief government spokesman, Yukio Edano, told a televised press conference. “There were no fuel rods in the reactor, but spent fuel rods are inside.” (From the New York Times article cited below.)

This nuclear accident is already among the top three nuclear disasters of all time: Three Mile Island, Chernobyl, and now Fukushima. Let’s hope it doesn’t become the worst!

The best description of the current state at the Fukushima Daiichi nuclear plant can be found in the New York Times: “Japan Faces Potential Nuclear Disaster as Radiation Levels Rise.”
