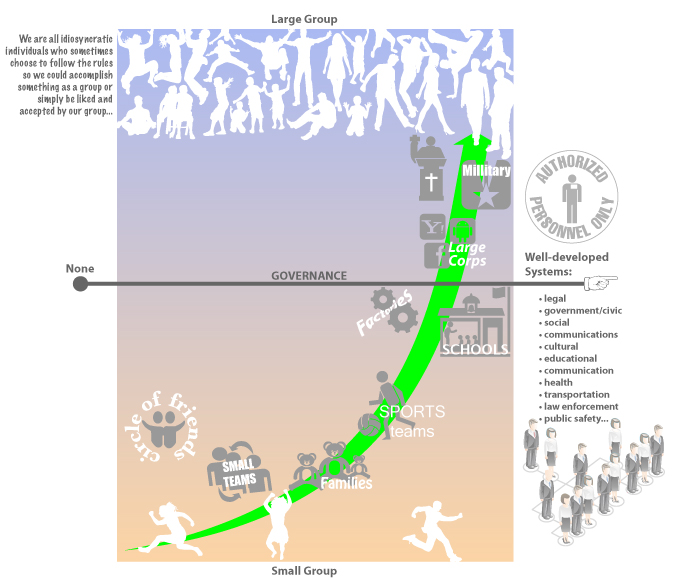

One area of discussion at the NIH Citizen Science Engagement Think Tank meeting last month was how to categorize the roles (and thus the rules of engagement) for citizen scientists. There was continuous pressure to label individuals who “donate” their medical data to scientific research as “patients.” Let me start by saying that I find that unacceptable. Aside from the fact that every human being on Earth has been or will be a patient at some point in their life, the label “patient” implies a lower level on the hierarchy than “doctor” or “scientist.” The whole point of citizen science initiatives is to break down the barriers to entry: we are ALL scientists! Being a scientist is not measured by the number of years in school or diplomas on the wall. It is the willingness to do science that is key. Thus we can all be scientists.

With that said, what follows is a discussion of group dynamics: how people work in groups, and how we can support productive scientific endeavors through good design and social engineering.

Think Different Collective

Groups of people are not made up of homogeneous people; we are all idiosyncratically…