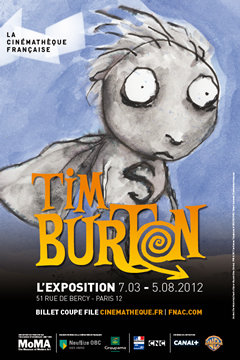

Man-made Disasters in the Wake of a Tsunami

This month, the Fukushima Nuclear Accident Independent Investigation Commission issued its final report on the disaster: it was man-made! Here’s a quote from the report:

> What must be admitted — very painfully — is that this was a disaster “Made in Japan.” Its fundamental causes are to be found in the ingrained conventions of Japanese culture: our reflexive obedience; our reluctance to question authority; our devotion to ‘sticking with the program’; our groupism; and our insularity. Had other Japanese been in the shoes of those who bear responsibility for this accident, the result may well have been the same.

The last sentence is particularly insightful. The blame was not placed on the shoulders of a particular individual, as tempting as that might be, or even on those of some manager. The fault was placed on the cultural context in which the incident played out.

Museums in Paris

We just got back from seeing a Tim Burton exhibit at La Cinémathèque in Paris. The content of the exhibit, as one could imagine, is quite wonderful. But there were many, many human failures that got in the way of making the visit an enjoyable experience. And yes,…